I thought I’d finally wrap this up so I can move on to other things. Since I last posted, I have replaced my Jankinator 1000 (an NVIDIA Tesla P40 with a water cooler) and my NVIDIA RTX 2060 with an Intel Arc A770. It has 16 GB of VRAM and is actually a pretty fast GPU on my Linux box.

So far I’ve had pretty good luck getting things like TensorFlow and PyTorch working on it, as mentioned in a previous blog post. The only thing I haven’t gotten to work 100% is Facebook’s Segment Anything Model 2 (SAM2), which of course is what I said in the last post I wanted to use with the LIDAR GeoTIFF. It’s now basically down to running out of VRAM, although I’m not sure exactly why, since I’ve tweaked the settings for OpenCL memory allocation on Linux, etc. I’ve given up on that one for now and decided to just use the CPU for processing.
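Falling back to the CPU doesn’t take much code. Below is a minimal sketch of device selection, assuming a PyTorch build that exposes Intel GPUs through `torch.xpu` (recent PyTorch releases include it). The `pick_device` helper is hypothetical; the string it returns could be passed as the `device` argument of SAM2’s `build_sam2()`.

```python
import importlib.util


def pick_device(prefer_gpu: bool = True) -> str:
    """Return "xpu" if an Intel GPU is usable through PyTorch, else "cpu".

    Assumes a PyTorch build with XPU support (torch.xpu). If torch is not
    installed, or the build has no XPU backend, this falls back to "cpu".
    """
    if not prefer_gpu or importlib.util.find_spec("torch") is None:
        return "cpu"
    import torch

    xpu = getattr(torch, "xpu", None)  # absent on builds without XPU support
    if xpu is not None and xpu.is_available():
        return "xpu"
    return "cpu"


# Forcing CPU processing is just prefer_gpu=False.
device = pick_device(prefer_gpu=False)
print(device)  # cpu
```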

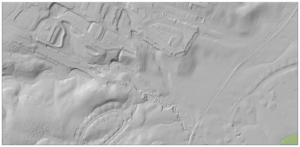

As a refresher, here is the LIDAR GeoTIFF I have been using.

SAM2 can automatically generate masks for an input image. This interested me because I wanted to see whether it could automatically identify areas of interest in LIDAR. Fortunately, the GitHub repo for SAM2 has a Jupyter notebook that made it easy to run some experiments.
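SAM2’s automatic mask generator expects an ordinary RGB image (an H × W × 3 `uint8` array), while a LIDAR GeoTIFF often stores a single band of elevations as floats. A minimal sketch of that conversion follows, assuming the raster holds elevations with an optional nodata value; the function name and the 2nd/98th percentile stretch are illustrative choices, not part of SAM2.

```python
from typing import Optional

import numpy as np


def elevation_to_rgb(dem: np.ndarray, nodata: Optional[float] = None,
                     low_pct: float = 2.0, high_pct: float = 98.0) -> np.ndarray:
    """Stretch a single-band elevation raster into an H x W x 3 uint8 image."""
    dem = dem.astype(np.float64)
    valid = np.isfinite(dem)
    if nodata is not None:
        valid &= dem != nodata
    if not valid.any():
        return np.zeros(dem.shape + (3,), dtype=np.uint8)
    # Clip to percentiles so a few outliers (birds, noise) don't flatten the contrast.
    lo, hi = np.percentile(dem[valid], [low_pct, high_pct])
    scale = hi - lo if hi > lo else 1.0
    stretched = np.clip((dem - lo) / scale, 0.0, 1.0)
    stretched[~valid] = 0.0  # nodata and NaN cells become black
    gray = (stretched * 255).round().astype(np.uint8)
    # Repeat the gray band into three identical channels.
    return np.repeat(gray[:, :, None], 3, axis=2)
```

The band itself can be read with rasterio (`src.read(1)`, with the nodata value in `src.nodata`), and the result passed to `SAM2AutomaticMaskGenerator(model).generate(rgb)`, which returns a list of dicts, one per mask.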

With all of the default parameters, we can see that it only identified the lower right corner of the image. I tweaked a few of the settings and came up with this version:
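For context on what those settings control: SAM2’s automatic mode prompts the model with a regular grid of points (`points_per_side`, 32 by default, so 1,024 prompts) and then discards masks that fall below `pred_iou_thresh` or `stability_score_thresh`; `min_mask_region_area` and `crop_n_layers` are other knobs worth trying. Which values produced the second image isn’t recorded here. The sketch below mirrors how such a cell-centred grid is built and scaled to pixels; `grid_in_pixels` is a hypothetical helper.

```python
import numpy as np


def build_point_grid(n_per_side: int) -> np.ndarray:
    """Evenly spaced (x, y) prompt points in [0, 1] x [0, 1], one per cell centre."""
    offset = 1.0 / (2 * n_per_side)
    one_side = np.linspace(offset, 1.0 - offset, n_per_side)
    xs = np.tile(one_side[None, :], (n_per_side, 1))  # x varies along each row
    ys = np.tile(one_side[:, None], (1, n_per_side))  # y varies down the columns
    return np.stack([xs, ys], axis=-1).reshape(-1, 2)


def grid_in_pixels(n_per_side: int, width: int, height: int) -> np.ndarray:
    """Scale the normalized grid to pixel coordinates for a width x height image."""
    return build_point_grid(n_per_side) * np.array([width, height])
```

Doubling `points_per_side` quadruples the number of prompts, so denser grids that may catch smaller features get expensive quickly on a CPU.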

The second image does highlight some areas. It picked out a group of row houses at the bottom of the image and also found a few single-family houses. However, it also flagged areas where there is really nothing of interest.
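One way to cut down on false positives like these is to post-filter the masks. Below is a hypothetical heuristic, not something SAM2 provides: it keeps a mask only if its size is reasonable and its median elevation differs from a thin ring of pixels around it by at least `min_height`, so flat patches of ground drop out. It assumes the raster holds real elevations (a surface model, not a rendered hillshade) aligned pixel-for-pixel with the image given to SAM2, and that each mask dict has a boolean `segmentation` array, as in the generator’s default output mode. All thresholds are placeholders.

```python
from typing import Any, Dict, List

import numpy as np


def dilate(mask: np.ndarray, iterations: int) -> np.ndarray:
    """Grow a boolean mask by `iterations` pixels (4-connected), numpy only."""
    out = mask.copy()
    for _ in range(iterations):
        grown = out.copy()
        grown[1:, :] |= out[:-1, :]
        grown[:-1, :] |= out[1:, :]
        grown[:, 1:] |= out[:, :-1]
        grown[:, :-1] |= out[:, 1:]
        out = grown
    return out


def filter_masks(masks: List[Dict[str, Any]], dem: np.ndarray,
                 min_area: int = 50, max_area_frac: float = 0.25,
                 min_height: float = 2.0, ring_width: int = 3) -> List[Dict[str, Any]]:
    """Keep masks whose median elevation stands out from their surroundings."""
    kept = []
    for m in masks:
        seg = np.asarray(m["segmentation"], dtype=bool)
        area = int(seg.sum())
        if area < min_area or area > max_area_frac * dem.size:
            continue  # too small to matter, or so large it is probably background
        ring = dilate(seg, ring_width) & ~seg
        inside, around = dem[seg], dem[ring]
        inside, around = inside[np.isfinite(inside)], around[np.isfinite(around)]
        if inside.size == 0 or around.size == 0:
            continue
        height = np.median(inside) - np.median(around)
        if abs(height) >= min_height:  # raised (buildings) or sunken (pits) features
            kept.append(m)
    return kept
```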

Finale

What have we learned from all of this? Well, LIDAR is hard. Automatically finding features of interest in LIDAR is also hard. We can get some decent results using image processing and/or deep learning techniques, but as with anything in this field, we are nowhere near 100%.

Previously I posted about training a custom R-CNN to identify features of interest in GeoTIFF LIDAR. It was somewhat successful, although, as I said, it needed a lot more training data than I had available.

I do think that over time, people will develop models that do a good job of finding certain areas of interest in LIDAR. Some fields, archaeology in particular, already have software to find specific features in LIDAR, and this is probably how things will continue for a while. We probably will not have a generalized “find everything of interest in this LIDAR image” model or software for a long time. However, it is possible to train a model to identify specific features.

I am also working with LIDAR point data rather than just the GeoTIFF versions. Point data is a very different beast: once it has been processed, each point carries a classification. You can then do things such as extract tree canopy points or bare ground points. Converting to a raster necessarily loses information, since the points have to be interpolated and reduced to produce a grid. I’ll post some things here in the future; while point cloud data can be harder to work with, I think the results are better than what can be obtained from rasters.
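Here is a minimal sketch of both ideas, assuming the points are already loaded as an N × 3 array of x, y, z plus a classification array (with laspy, for example, from `las.x`, `las.y`, `las.z` and `las.classification` after `laspy.read()`). Class codes follow the ASPRS standard used in LAS files, where 2 is ground and 5 is high vegetation. `rasterize_min_z` is a hypothetical helper that keeps only the lowest point per cell, which makes the information loss explicit.

```python
import numpy as np

GROUND = 2           # ASPRS LAS classification code
HIGH_VEGETATION = 5  # ASPRS LAS classification code


def select_class(xyz: np.ndarray, classification: np.ndarray, code: int) -> np.ndarray:
    """Return the rows of an N x 3 point array whose class equals `code`."""
    return xyz[classification == code]


def rasterize_min_z(xyz: np.ndarray, cell: float) -> np.ndarray:
    """Grid points into square cells, keeping only the lowest z per cell.

    Every other point in a cell is discarded, which is where a raster
    loses detail. Row 0 is the northern edge; empty cells are NaN.
    """
    x, y, z = xyz[:, 0], xyz[:, 1], xyz[:, 2]
    x0, y_top = x.min(), y.max()
    cols = np.floor((x - x0) / cell).astype(int)
    rows = np.floor((y_top - y) / cell).astype(int)
    grid = np.full((rows.max() + 1, cols.max() + 1), np.inf)
    np.minimum.at(grid, (rows, cols), z)  # unbuffered per-cell minimum
    grid[np.isinf(grid)] = np.nan
    return grid
```

Swapping `np.minimum.at` for `np.maximum.at` (with a `-np.inf` fill) and feeding it the vegetation classes would give a rough canopy-top surface instead.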