Some Examples

Now that we have some of the basics down, let us look at some practical examples of the differences between how the brain sees things and how a computer does.

The above photo of a part of the sky was taken with my iPhone 13 Pro Max using the native camera application. No filters or other adjustments were applied to it. To our eyes, it looks fairly uniform: mainly blue, with some lighter blue towards the right, where the sun was the day I took the picture. Each pixel of the image represents light that hit the camera's sensor, was processed, and was then saved.

Our brain does not see a number of individual pixels. Instead, we see large splotches of color. This is one of the shortcuts our brain takes to ease the processing burden. If you look around a room, you do not see the individual differences between the colors of the wall. Your wall mainly looks like one uniform color. We simply do not have the processing power to break down the inputs from our eyes into every minute part.

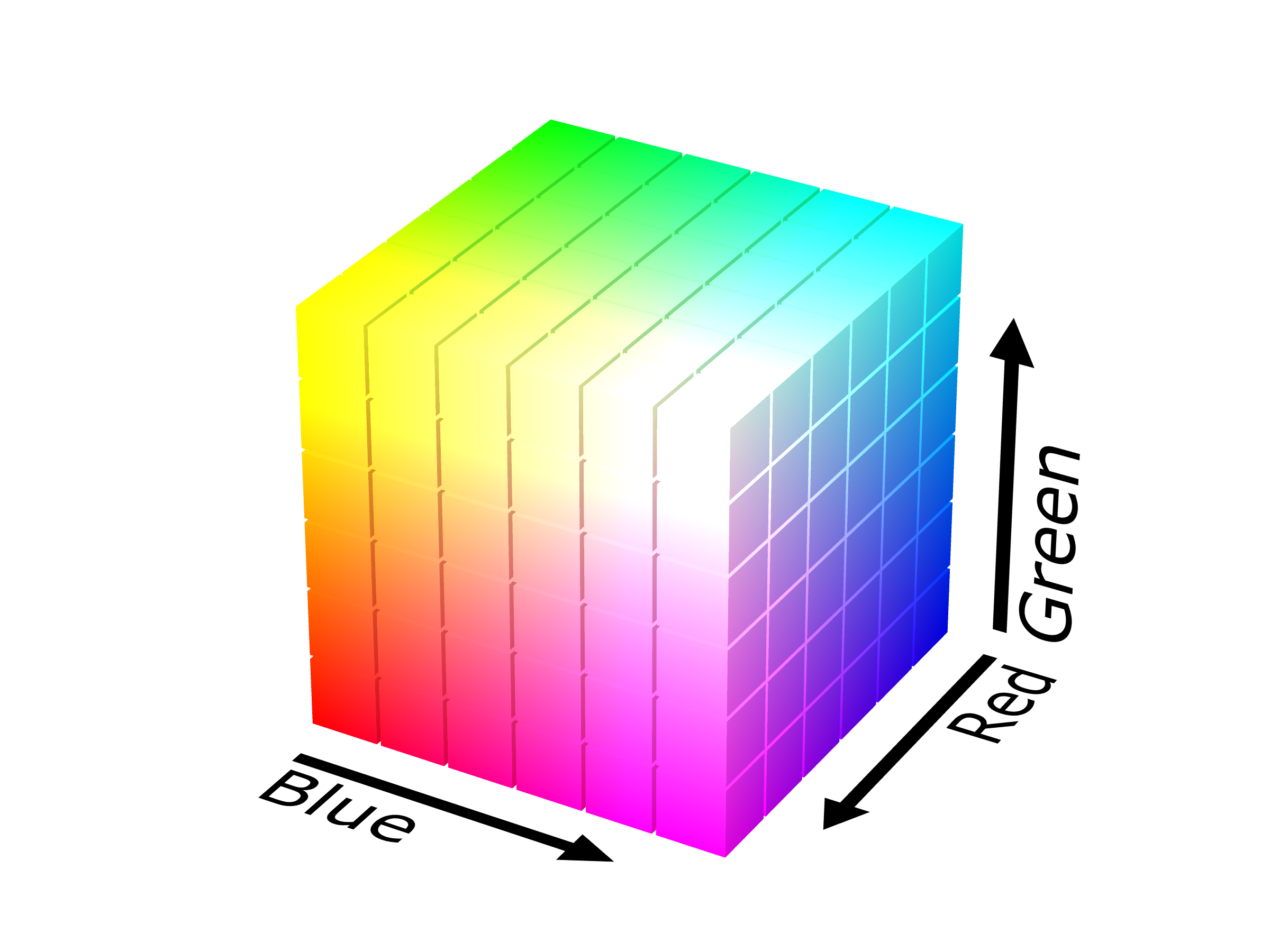

A computer, however, does have the ability to “see” an image in all of its different parts. Computers see everything as a number, be it the 1’s and 0’s of binary or color triplets in the RGB color space. If we look at the RGB color cube below, the computer sees all of the pixels in the above image as clustering somewhere around the lower right side of the cube. See the previous link for more information about the RGB color space.

In a computer, the above image is loaded and each pixel is stored in memory as a triplet such as (135, 206, 235), which is the code for a color known as sky blue. The computer also does not have to take any shortcuts when it loads the image, meaning that the representation in memory is exactly the same as the image that was saved from the phone.
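To make the triplet idea concrete, here is a minimal sketch in Python with NumPy. It builds a tiny stand-in image instead of loading the sky photo, so the helper name `make_solid_image` and the 2 x 3 size are purely illustrative assumptions.

```python
import numpy as np

# Sky blue as an RGB triplet, as quoted in the text.
SKY_BLUE_RGB = (135, 206, 235)

def make_solid_image(height, width, rgb):
    """Build a height x width image where every pixel holds the same triplet."""
    # Shape is (rows, columns, 3 channels); uint8 gives the usual 0..255 range.
    image = np.empty((height, width, 3), dtype=np.uint8)
    image[:, :] = rgb
    return image

# A hypothetical 2 x 3 image: six pixels, each stored as three numbers.
image = make_solid_image(2, 3, SKY_BLUE_RGB)

# Reading one pixel back gives the triplet for that position.
r, g, b = image[0, 0]
print(int(r), int(g), int(b))
```

If you load a real file with OpenCV's `cv2.imread`, you get the same kind of array with one catch: OpenCV orders the channels blue, green, red, so a sky blue pixel would read back as (235, 206, 135).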

If we use the OpenCV library to calculate the histogram of the image and then count the number of colors, we find that there are in fact 2,522 unique colors in the picture of the sky. There is no magic here. We just do not have the same precision that a computer does when it comes to examining images or our environment. The big takeaway here is this: there is more information encoded in pictures or video than our brains are capable of perceiving. Just because we cannot see certain details in an image does not mean that they are not there.
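Counting colors does not take much code. The sketch below is one possible way to do it, and it swaps the histogram for NumPy's `np.unique`, which gets to the same count by a different route. The function name `count_unique_colors` and the tiny sample image are assumptions made for illustration, not the code used for the sky photo.

```python
import numpy as np

def count_unique_colors(image):
    """Return the number of distinct color triplets in an H x W x 3 image."""
    # Flatten the grid of pixels into one long list of triplets.
    pixels = image.reshape(-1, image.shape[-1])
    # axis=0 makes np.unique compare whole rows (triplets), not single values.
    return len(np.unique(pixels, axis=0))

# A hypothetical 2 x 2 image: two pixels share a color, the other two differ
# from it by a single step in one or more channels.
tiny = np.array(
    [[[135, 206, 235], [135, 206, 235]],
     [[134, 206, 235], [135, 205, 236]]],
    dtype=np.uint8,
)
print(count_unique_colors(tiny))  # prints 3
```

All four pixels in the sample would look like the same blue to us, yet the computer counts three different colors. On a real photo you would pass in the array returned by `cv2.imread` instead of `tiny`.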

For another example, consider the image below. The edges look like nothing but black, and all you can really see is the view out of the window. It is definitely underexposed.

As mentioned above, a computer is able to detect more than our eyes can. Where we just see black around the edges, there is in fact detail there. We can adjust the exposure on the image to brighten it so that our eyes can see these details.

With the exposure turned up (and adjusting the contrast as well), we can additionally see a picture of a bird, some dishes, and some cooking implements. This is not magic, nor is it adding anything to the image that was not already there. Image processing like this does not insert things into an image. It only enhances the details of an image so that they are more detectable to the human eye.
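Here is a rough sketch of why brightening reveals detail without inventing any. It assumes the simplest possible adjustment, multiplying every pixel value by a fixed gain; the exposure slider in a real photo editor is more sophisticated than this, and the name `brighten` and the sample values are hypothetical.

```python
import numpy as np

def brighten(image, gain):
    """Multiply every pixel value by `gain`, clipping the result to 0..255."""
    # Work in floating point so the multiplication cannot wrap around at 255.
    scaled = image.astype(np.float32) * gain
    return np.clip(scaled, 0, 255).astype(np.uint8)

# Hypothetical pixel values: three near-black shades and one bright one.
dark = np.array([[0, 5, 10, 200]], dtype=np.uint8)
print(brighten(dark, 4.0))  # prints [[  0  20  40 255]]
```

The values 5 and 10 were already different in the original; the gain only spreads them apart to 20 and 40, where our eyes have a better chance of telling them apart. Note that the bright pixel is clipped at 255, which is why pushing the exposure too far can wash out the parts of the image that were already bright.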

When image processing is in the news, people often assume that it is changing an image, or that it is inserting things that were not originally there. When you edit your images on your phone or tablet, you are manipulating the detail that is already in the image. You can enhance the contrast to make the image “pop.” You can change the color tone of the image to make it appear warmer or colder to your liking. However, this is simply modifying the information that is already in the image to change how it appears to the human eye.

I am making a big deal about this point because future installments in this series will demonstrate how things actually work while hopefully dispelling certain myths that exist in pop culture. I think next time I will cover zooming in or out of an image (a.k.a. resizing). Does it add something to the image or misrepresent it? We will find out.