OK, I will admit it took me longer than I had planned to finish this up. Life got in the way. But now seems like a good time to wrap up the series and move on to another.

In the first parts of this series, we looked at why English is such a challenging language for natural language processing (NLP), and how early methods like rules-based systems and Bag of Words models approached the problem. But like everything else, access to powerful GPUs and advances in AI have had a big impact on modern NLP techniques.

Today, NLP has evolved dramatically, driven by new methods that are much better at handling the complexity and ambiguity of human language.

Let us look at some of the modern techniques that have reshaped NLP in recent years.

Word Embeddings: Giving Words Meaning

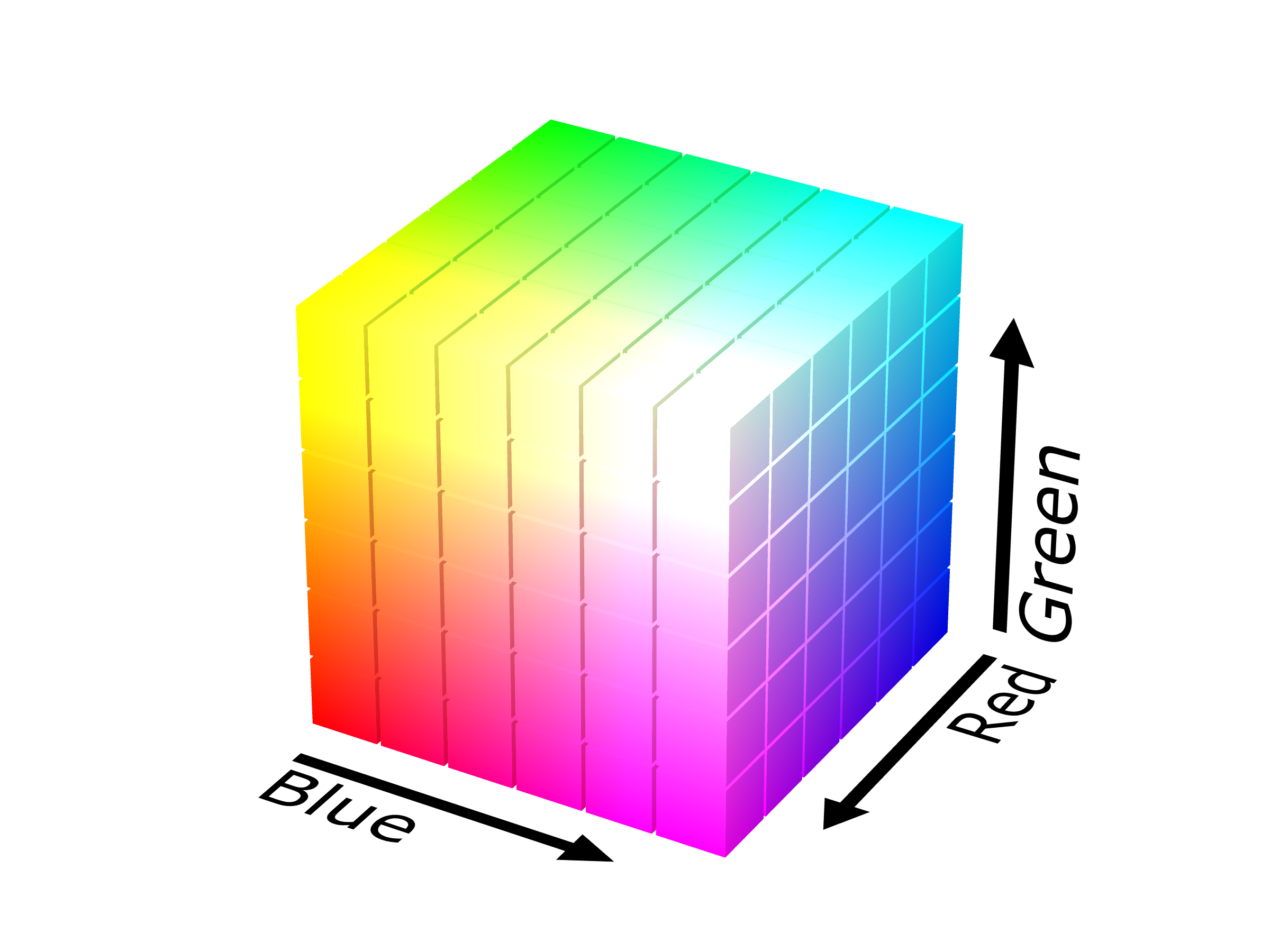

One major leap forward came with word embeddings — ways of representing words as vectors in a multi-dimensional space, where words with similar meanings are close together.

Unlike older methods that treated words as isolated tokens, embeddings like Word2Vec, GloVe, and FastText actually learned that words like “king” and “queen” are related, or that “Paris” and “France” have a strong connection.

These embeddings helped NLP systems recognize relationships and similarities between words — even when they were not identical — which made downstream tasks like translation, search, and classification much more accurate.
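To make "close together" a bit more concrete, here is a minimal sketch of how closeness between word vectors is often measured, using cosine similarity. The three-dimensional vectors are numbers I made up for illustration; real embeddings are learned from text and usually have a hundred or more dimensions.

```python
import math

# Hypothetical 3-dimensional vectors, invented for illustration only.
# Real embeddings are learned from text and are much larger.
toy_embeddings = {
    "king":  [0.90, 0.80, 0.10],
    "queen": [0.85, 0.90, 0.15],
    "apple": [0.10, 0.20, 0.95],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


king_queen = cosine_similarity(toy_embeddings["king"], toy_embeddings["queen"])
king_apple = cosine_similarity(toy_embeddings["king"], toy_embeddings["apple"])

print(f"king vs queen: {king_queen:.3f}")
print(f"king vs apple: {king_apple:.3f}")
```

With these toy numbers, king and queen score much higher than king and apple, which is the behavior a trained embedding model is meant to produce for related words.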

Let us dig a little deeper into how these things work by taking a look at Word2Vec. Word2Vec generates a vector representation of words in a multi-dimensional space. In this space, similar words end up being “closer” to one another. The idea behind it is that words that appear in similar contexts tend to have similar meanings.

Word2Vec trains a shallow neural network to learn the relationships between words. It has a single dense hidden layer, and the weights learned by that layer become the word embeddings once training is done. The network can be trained in two different ways.

The first way is the Skip-Gram model, which is probably the better known of the two. We take each word in a sentence in turn as the input word and train the model to predict the nearby words within a certain window size. The resulting word pairs (such as cat and furry) are fed into the network for training, so the model learns to predict context words given an input word. A very simplified example of the network is given below.

Input Layer (one-hot encoding of the input word from a training pair such as (cat, furry))

|

Hidden Layer (dense layer)

|

Output Layer (Softmax layer that outputs probabilities for words being a nearby word)
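For readers who prefer code to my diagrams, here is a rough sketch of the Skip-Gram idea in plain Python. It builds the (input word, context word) pairs and runs one untrained forward pass through the three layers. The function names, the example sentence, the window size, and the random weights are all my own assumptions; this is not how any particular Word2Vec library is implemented, and it leaves out training entirely.

```python
import math
import random


def skipgram_pairs(tokens, window_size=2):
    """Return (center_word, context_word) pairs for every position in tokens."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window_size)
        end = min(len(tokens), i + window_size + 1)
        for j in range(start, end):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


sentence = "the furry cat purrs".split()
pairs = skipgram_pairs(sentence, window_size=1)
for center, context in pairs:
    print(center, "->", context)

# A tiny untrained network: random weights stand in for learned ones.
vocab = sorted(set(sentence))
word_to_index = {word: i for i, word in enumerate(vocab)}
embedding_dim = 3  # hypothetical size, far smaller than real models use
rng = random.Random(0)
w_in = [[rng.uniform(-0.5, 0.5) for _ in range(embedding_dim)] for _ in vocab]
w_out = [[rng.uniform(-0.5, 0.5) for _ in vocab] for _ in range(embedding_dim)]


def predict_context(center_word):
    """Forward pass: one-hot input -> hidden layer -> softmax over the vocabulary."""
    # Multiplying a one-hot vector by w_in just selects one row of w_in.
    hidden = w_in[word_to_index[center_word]]
    scores = [
        sum(hidden[d] * w_out[d][v] for d in range(embedding_dim))
        for v in range(len(vocab))
    ]
    return softmax(scores)


probs = predict_context("cat")
for word, p in zip(vocab, probs):
    print(f"P({word} is near cat) = {p:.3f}")
```

Because the weights are random, the probabilities are meaningless at this point. Training would nudge the weights so that words from the real pairs get higher probability, and the rows of `w_in` would end up as the embeddings.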

The second method is the Continuous Bag of Words (CBOW) model. Here the model is given the context words and tries to predict the target word. It can be thought of as the reverse of the previous technique. It uses a fixed-size window of context words around the target word. So if our target word in a sentence is cat, we could have context words of furry and purrs. Another piece of my masterful graphic art shows how this works below.

Input Layer (context words are one-hot encoded and their vectors are averaged or summed into a single input vector, depending on the implementation)

|

Hidden Layer (dense layer)

|

Output Layer (Softmax layer that outputs probabilities of a single word being the target word)
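Here is a small sketch of the CBOW input side, again in plain Python. It builds (context words, target word) examples and averages the context vectors into the single vector that would be fed to the hidden layer. The sentence, the window size, and the two-dimensional embeddings are made-up values for illustration, and I chose averaging rather than summing just to pick one.

```python
def cbow_examples(tokens, window_size=1):
    """Return (context_words, target_word) training examples."""
    examples = []
    for i, target in enumerate(tokens):
        start = max(0, i - window_size)
        end = min(len(tokens), i + window_size + 1)
        context = [tokens[j] for j in range(start, end) if j != i]
        examples.append((context, target))
    return examples


def average_vectors(vectors):
    """Average a list of equal-length vectors element by element."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


sentence = "the furry cat purrs".split()
examples = cbow_examples(sentence, window_size=1)
for context, target in examples:
    print(context, "->", target)

# Hypothetical 2-dimensional embeddings, invented for this example.
toy_embeddings = {
    "the":   [0.1, 0.0],
    "furry": [0.2, 0.8],
    "cat":   [0.3, 0.9],
    "purrs": [0.4, 0.6],
}

# The example whose target is "cat" has "furry" and "purrs" as context.
context, target = examples[2]
hidden_input = average_vectors([toy_embeddings[word] for word in context])
print("input vector for target", target, "=", hidden_input)
```

From here the averaged vector would pass through the output layer and a softmax, just like the last step of the Skip-Gram network, except that the model is scored on predicting the single target word.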

But traditional embeddings had one big weakness: each word only had one vector, no matter how it was used. “Bank” always meant the same thing, whether you were talking about rivers or money.

That led to the next big innovation: contextual embeddings.

Contextual Embeddings: Understanding Words in Context

With models like ELMo and BERT, NLP systems moved beyond static word meanings. Now, the context of a word — the words around it — could change its meaning. If you wrote “he deposited cash at the bank” versus “they sat by the river bank,” modern models could understand that bank means two very different things.

This made a huge difference in tasks like question answering, translation, and search, where understanding the nuance of a sentence is critical.

Transformers: The Engine Behind Modern NLP

All of this was made possible by a groundbreaking architecture introduced in 2017: the Transformer.

Transformers, introduced in the paper Attention Is All You Need, largely replaced older models like RNNs and LSTMs by doing something surprisingly simple: instead of reading words one by one, they looked at the entire sentence (or even paragraph) all at once.

At the heart of transformers is the attention mechanism, which lets the model figure out which words in a sentence are most important when trying to understand a given word.

This means a model can understand relationships across an entire sentence — or even multiple sentences — no matter how far apart the words are.
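As a rough illustration of the attention idea, here is scaled dot-product self-attention written in plain Python. To keep it short I made a big simplification: the token vectors themselves are used as the queries, keys, and values, whereas a real Transformer learns separate projection matrices for each and runs several attention heads in parallel. The token vectors are invented numbers.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = vectors.

    Returns the new (context-aware) vectors and the attention weights.
    """
    dim = len(vectors[0])
    outputs = []
    all_weights = []
    for query in vectors:
        # Score this token against every token in the sequence, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The new vector is a weighted mix of all the token vectors.
        output = [
            sum(w * value[d] for w, value in zip(weights, vectors))
            for d in range(dim)
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


tokens = ["river", "bank", "water"]
toy_vectors = [[1.0, 0.0], [0.7, 0.7], [0.9, 0.1]]  # hypothetical embeddings

outputs, weights = self_attention(toy_vectors)
for token, row in zip(tokens, weights):
    print(token, "attends with weights", [round(w, 2) for w in row])
```

Every token gets a weight for every other token in a single step, so distance within the sentence does not matter. That is what lets the vector for bank be pulled toward river and water here.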

For example, we will look at how BERT (Bidirectional Encoder Representations from Transformers) works. BERT is an encoder-only Transformer architecture that consists of four main modules:

- The Tokenizer Module converts the words in a sentence into a series of tokens.

- The Embeddings Module converts the tokens into embeddings.

- The Encoder Module is a stack of Transformer blocks that use self-attention without causal masking. Self-attention here basically means the blocks determine the importance of each component in a sequence relative to the other components of the sequence. This lets the model learn the relationships between words no matter where they appear in a sentence.

- The Task-Head Module uses the final embeddings to predict outputs, such as masked words or next sentence predictions, typically through a classification layer.

BERT processes the entire sentence at once, versus other methods that look at text sequentially. As a whole model, BERT is pre-trained using two self-supervised learning tasks and can then be fine-tuned for specific downstream tasks.

- Masked Language Modeling (MLM) masks random words in a sentence, and the model learns to predict the masked words based on the context provided by the other words in the sentence.

- Next Sentence Prediction (NSP) gives the model pairs of sentences, and the model learns to predict whether or not the second sentence actually follows the first in the original text.
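The two training tasks above are mostly about how the training examples are built, so here is a simplified sketch of that data preparation in plain Python. The helper names and sentences are my own. The masking is also simpler than what BERT does: as I understand it, the original recipe selects about 15% of tokens and only replaces most of those with a mask token, while this sketch always inserts `[MASK]`.

```python
import random


def mask_tokens(tokens, mask_probability=0.15, seed=0):
    """Replace a random subset of tokens with [MASK].

    Returns the masked tokens and a dict of {position: original_token}
    that the model would be trained to predict.
    """
    rng = random.Random(seed)
    masked = []
    labels = {}
    for position, token in enumerate(tokens):
        if rng.random() < mask_probability:
            masked.append("[MASK]")
            labels[position] = token
        else:
            masked.append(token)
    return masked, labels


def nsp_examples(sentences, seed=0):
    """Build (first, second, is_next) examples from an ordered list of sentences."""
    rng = random.Random(seed)
    examples = []
    for i in range(len(sentences) - 1):
        # Positive example: the sentence that really comes next.
        examples.append((sentences[i], sentences[i + 1], True))
        # Negative example: some other sentence from the document.
        candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
        if candidates:
            examples.append((sentences[i], rng.choice(candidates), False))
    return examples


tokens = "the furry cat purrs on the warm sofa".split()
# A high masking rate is used here only so the tiny example shows some masks.
masked, labels = mask_tokens(tokens, mask_probability=0.5, seed=1)
print(masked)
print(labels)

document = [
    "The cat sat by the window.",
    "It watched the birds outside.",
    "Interest rates rose again this week.",
]
for first, second, is_next in nsp_examples(document):
    print(is_next, "|", first, "|", second)
```

Neither task needs human-written labels. The answers come from the text itself, which is why this kind of pre-training can use very large amounts of raw text.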

Pre-trained Language Models: A Giant Leap

Once transformers became popular, researchers realized they could pre-train huge language models on massive amounts of text — and then fine-tune them for specific tasks.

Instead of training a model from scratch every time you wanted to do translation, or summarization, or classification, you could just start with a giant model that already knew a lot about language, and tweak it slightly.

Some of the most important pre-trained models today include:

- BERT: A transformer-based model that reads text bidirectionally, learning to predict missing words.

- GPT (Generative Pre-trained Transformer): A model that learns by predicting the next word in a sequence, leading to natural text generation (and later, chatbots).

- T5 (Text-to-Text Transfer Transformer): A model that frames everything as a text generation task, from translation to summarization.

These models have powered major advances in search engines, customer support chatbots, translation apps, and even tools like ChatGPT. Let us look at GPTs as an example of these models.

GPTs are trained on a huge input corpus such as textbooks, Wikipedia, blogs, websites, and other sources. With the word Transformer in the name, we know that they use Transformers to process the training data. As the input data is fed into the model, it uses self-attention to consider the context of each word based on the words that come before it, and it learns by trying to predict the next word. This self-supervised learning approach lets the model learn from vast amounts of unlabeled text, though the training data is often filtered for quality.
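One detail that separates GPT-style models from BERT is worth a small sketch: GPTs use a causal mask, so each position can only attend to itself and to earlier positions. That is what makes next-word prediction a fair test during training. The code below is my own toy version in plain Python, with all raw attention scores set to zero so that only the effect of the mask shows up.

```python
import math


def causal_mask(length):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(length)] for i in range(length)]


def masked_softmax(scores, allowed):
    """Softmax over scores, giving zero weight to positions that are not allowed."""
    masked = [s if ok else float("-inf") for s, ok in zip(scores, allowed)]
    highest = max(masked)
    exps = [math.exp(s - highest) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]


tokens = ["the", "cat", "purrs"]
mask = causal_mask(len(tokens))

# Equal raw scores, so any difference in the weights comes from the mask alone.
uniform_scores = [0.0] * len(tokens)
for token, allowed in zip(tokens, mask):
    attention_weights = masked_softmax(uniform_scores, allowed)
    print(token, [round(w, 2) for w in attention_weights])
```

The first token can only look at itself, the second splits its attention between the first two, and so on. BERT's encoder skips this mask, which is why it can use context on both sides of a word.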

When you give a GPT a prompt, it first breaks the text you typed into tokens and generates an embedding for each token. It passes these embeddings through the model architecture to capture the relationships between the words and their meanings.

The Generative part creates output text one token at a time: the model computes a probability for every possible next token, picks one, appends it to the text so far, and repeats until the output is complete. The key here is that the output is entirely based on probabilities. As your input text goes through the model, it produces the continuation that its training suggests is most likely to follow your prompt. It may seem like the model understands what you have said, but in the end everything is based on probability. This is also why GPTs can hallucinate, where the model produces output that sounds plausible but makes no sense or contains incorrect information.
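To show what "based on probabilities" looks like in practice, here is a toy sketch of picking the next word. The three-word vocabulary and the scores (often called logits) are numbers I invented for a prompt like "the cat sat on the". A real model scores tens of thousands of tokens, and the `temperature` setting shown here is one common sampling control, not the only one.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = [value / temperature for value in logits]
    highest = max(scaled)
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab, logits, temperature=1.0, rng=None):
    """Pick one token at random, weighted by its probability."""
    if rng is None:
        rng = random.Random()
    probabilities = softmax(logits, temperature)
    return rng.choices(vocab, weights=probabilities, k=1)[0]


# Hypothetical vocabulary and scores for the prompt "the cat sat on the".
vocab = ["mat", "sofa", "moon"]
toy_logits = [2.0, 1.5, -1.0]

probs = softmax(toy_logits)
for word, p in zip(vocab, probs):
    print(f"P({word}) = {p:.3f}")

rng = random.Random(42)
samples = [sample_next_token(vocab, toy_logits, rng=rng) for _ in range(1000)]
for word in vocab:
    print(word, samples.count(word))
```

Most of the samples are the likely words, but the unlikely one still gets a small share of the probability and can be picked now and then. That small chance of picking a poor continuation is a simplified picture of how sampling can contribute to output that reads fluently but is wrong.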

Challenges Still Remain

While modern NLP has come a long way, it’s far from perfect. Some of the challenges include:

- Bias in training data: Large language models can reflect the biases of the data they were trained on.

- Understanding rare or low-resource languages: Most models are still strongest in English and other major languages.

- Cost and energy usage: Training massive models requires enormous amounts of computing power.

Researchers are actively working on these issues, but they show that even with today’s powerful tools, the complexity of human language is still a hard problem.

Wrapping Up

From handcrafted rules to massive transformers, NLP has arguably evolved faster in recent years than almost any other field in AI.

While English — with its ambiguity, irregular grammar, and endless exceptions — remains a tough language for machines to master, the combination of contextual understanding, transformers, and pre-trained models has made it possible to do things that seemed like science fiction just a decade ago.

The future of NLP is even more exciting, with research moving toward multilingual models, more efficient architectures, and even models that can understand images, sounds, and language together.

Thanks for joining me on (and waiting on me to finish) this quick tour through the world of NLP. Until next time.